Nov 3, 2024

My Thesis Behind Coval

Coval presents a lucrative "picks-and-shovels" play, providing essential simulation and evaluation infrastructure for startups building AI agents.

The advancements we've seen in AI over the last few years have been remarkable to say the least. I still remember experimenting with ChatGPT on the day it launched in November of 2022 while I was studying abroad in Europe. With a much more limited knowledge of AI than I have now, I used ChatGPT for the two things I needed most as a study-abroad student – helping with schoolwork and planning my next travel destinations! The launch of GPT-3.5 was a pivotal moment in the history of AI, marking the first time interacting with AI felt personal and human-like.

As foundation models continue to improve their reasoning abilities, I'm excited to see how AI can finally assist with highly specific tasks I don't have the time, desire, or ability to do myself.

For example, my roommates and I have been looking to get tickets to a Knicks vs. Celtics game this season (who wouldn't want to see this Knicks team?). With ChatGPT Search, I can now find where these tickets are being sold, who offers the lowest prices, and how to purchase them – all from the familiar chat interface.

The next step in the AI evolution will be agents capable of purchasing these tickets for me. If I provide the agent with certain relevant information – like my email address and credit card details – it should be able to use its advanced reasoning abilities to find the ticket best suited to my preferences and navigate the web to complete the purchase.

While I'm excited about this next step in AI, as an early adopter I'd still be cautious about giving my credit card information to an AI agent. What if it buys the wrong ticket, purchases more than I need, or – worst of all – inadvertently exposes my credit card information online?

Robust simulation and evaluation of AI agents is a prerequisite to large-scale adoption of AI agents.

The risks inherent in autonomous agents closely resemble those facing autonomous vehicles. Autonomous vehicles need to navigate the unpredictable physical world to get from a starting point to the rider's desired destination. In a sense, self-driving cars are autonomous agents "on wheels". Likewise, autonomous AI agents have to navigate the uncertain state of the digital world to execute their tasks effectively.

Brooke Hopkins developed the evaluation and autonomous simulation framework for self-driving cars at Waymo and is now looking to solve the same problem for autonomous AI agents. Here's why I have conviction in her and Coval:

- Where are we in the AI development cycle?

- How will we achieve a future with AI agents?

- What can we learn from autonomous vehicles?

- Who is Brooke Hopkins (founder of Coval)?

- What is Coval and my thesis behind the company

1. Where Are We in the AI Development Cycle?

While I'm excited to see a future with AI agents, I think it's important to contextualize where we currently are in the AI development cycle.

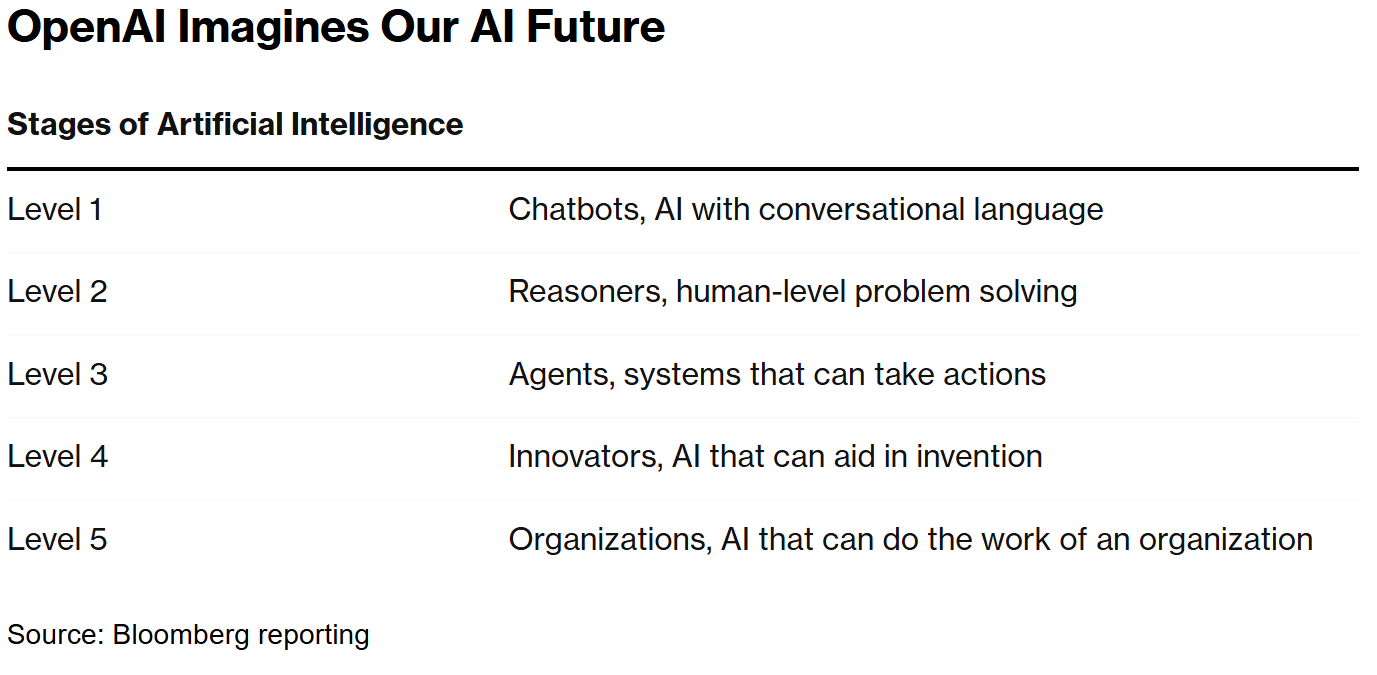

One of the most useful AI development frameworks I've found is Sam Altman's five stages of superintelligence. While this may not be exactly how AI advancement will ultimately unfold, it provides a helpful way to interpret current developments and anticipate what may come next.

The first layer is Chatbots, the foundational component that enables users to interact with AI through natural, intuitive conversation. Early tools like ChatGPT, running on GPT-3.5, were groundbreaking, making AI feel approachable and personal.

The second layer, Reasoners, is where AI currently stands. With the release of more powerful models such as OpenAI's o1, AI can now solve some complex problems at a level comparable to a human with a doctorate-level education.

The next frontier of AI is Agents – systems that can autonomously take actions on behalf of users over extended periods. Agents mark an important milestone: they shift AI from static, closed-loop interactions with humans to dynamic, open-loop operations.

The fourth and fifth layers are Innovators and Organizations, respectively. At the Innovator level, AI will be capable of generating new ideas and contributing to invention. Eventually, at the Organization level, AI will perform the work of an entire organization.

2. How Will We Achieve a Future with AI Agents?

As we move closer to a world with AI agents, I believe we are starting to see two broad types emerge – multi-step reasoning agents and autonomous agents.

Multi-step reasoning agents need to follow a defined series of steps to complete a task. A key characteristic of these agents is that they operate within a closed system.

The other type is autonomous agents. These agents are designed to navigate open, dynamic digital environments to achieve a task, which requires them to adapt to a wide range of situations and settings.

I believe a key catalyst for unlocking the value of autonomous AI agents will be simulation and evaluation of these agents to ensure their effectiveness.

3. What Can We Learn from Autonomous Vehicles?

Self-driving cars and autonomous AI agents share a core feature – they both operate by continuously perceiving and interpreting their environment, making decisions, and taking actions toward a pre-determined objective without human intervention. Unsurprisingly, they also share many of the same challenges, such as sensitivity to the butterfly effect and non-determinism.

Butterfly Effect: The butterfly effect is a concept from chaos theory where small, seemingly insignificant changes in initial conditions can lead to vastly different outcomes. For autonomous vehicles, this means that slight changes in the physical environment can significantly alter behavior. Autonomous AI agents face the digital equivalent: a slightly reworded request or a redesigned web page can send an agent down a very different path.

Non-Determinism: Non-determinism refers to the unpredictability of outcomes, even when initial conditions or inputs are identical. For autonomous vehicles, it results from factors like the unpredictable behaviors of other drivers. Similarly, AI agents face non-determinism when interacting with dynamic online environments.

The only way to approach the butterfly effect and non-determinism in both self-driving cars and autonomous agents is through extensive simulations and robust evaluations.
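To make these two ideas a bit more concrete, here is a toy Python sketch. It is entirely hypothetical and not how Waymo or Coval actually test anything: a made-up `toy_agent` decides whether a ticket fits a $100 budget, with random noise standing in for non-determinism. Running the identical scenario many times (`success_rate`) shows why one test run says little, and nudging the price by a dollar or two near the budget shows how small input changes can swing behavior, a loose stand-in for the butterfly effect.

```python
import random


def toy_agent(price: float, budget: float, rng: random.Random) -> bool:
    # Hypothetical agent: "decides" whether a ticket fits the budget.
    # Gaussian noise stands in for non-deterministic model behavior.
    noise = rng.gauss(0.0, 2.0)
    return price + noise <= budget


def success_rate(price: float, budget: float, trials: int, seed: int = 0) -> float:
    # Repeat the identical scenario many times. Seeding the RNG makes the
    # whole experiment reproducible even though each individual run is noisy.
    rng = random.Random(seed)
    buys = sum(toy_agent(price, budget, rng) for _ in range(trials))
    return buys / trials


if __name__ == "__main__":
    budget = 100.0
    # Small changes to one input (the listed price) near the decision
    # boundary produce large swings in how often the agent buys.
    for price in (95.0, 99.0, 100.0, 101.0, 105.0):
        rate = success_rate(price, budget, trials=1000)
        print(f"price={price:6.1f}  buy rate={rate:.1%}")
```

Running it, the buy rate swings sharply as the price moves a few dollars across the budget, even though the agent's "logic" never changes. Real agents are far messier, but the takeaway is the same: you measure behavior as a rate over many simulated runs, not from a single pass.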

4. Who Is Brooke Hopkins (the Founder of Coval)?

Brooke Hopkins has deep expertise in the challenges facing AI agents and is uniquely positioned to be a leader in this emerging space. Prior to founding Coval, she led the evaluation infrastructure team at Waymo, where she developed tools for assessing and launching autonomous vehicle simulations. When she joined Waymo, the company relied primarily on human evaluation; by the time she left in 2023, Waymo's autonomous vehicles had completed 7 million miles and significantly outperformed human benchmarks for safety.

She breaks down the approach to effective autonomous simulation into three key components – using a robust dataset, combining it with "key metrics", and running it on distributed computing systems to understand performance at scale.
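As a rough illustration of those three components, here is a minimal Python sketch. Everything in it is a hypothetical assumption for illustration (the `Scenario` schema, the `toy_agent`, the ticket prices, and the two metrics); it is not Coval's product or Waymo's framework. A thread pool on one machine stands in for the distributed computing a real system would use.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


# 1. Dataset: hypothetical test scenarios the agent will be run against.
@dataclass
class Scenario:
    request: str
    max_price: float


@dataclass
class Transcript:
    scenario: Scenario
    purchased_price: Optional[float]
    tickets_bought: int


LISTINGS = [180.0, 140.0, 95.0]  # hypothetical ticket prices


def toy_agent(scenario: Scenario) -> Transcript:
    # Stand-in for a real agent: buys the cheapest listing if it fits the budget.
    cheapest = min(LISTINGS)
    if cheapest <= scenario.max_price:
        return Transcript(scenario, cheapest, 1)
    return Transcript(scenario, None, 0)


# 2. Key metrics: each one scores a transcript as pass (True) or fail (False).
METRICS: Dict[str, Callable[[Transcript], bool]] = {
    "within_budget": lambda t: (
        t.purchased_price is None or t.purchased_price <= t.scenario.max_price
    ),
    "bought_exactly_one": lambda t: t.tickets_bought == 1,
}


# 3. Scale: run every scenario in parallel (threads stand in for a cluster),
# then report each metric's pass rate across the whole dataset.
def evaluate(
    dataset: List[Scenario],
    agent: Callable[[Scenario], Transcript],
    metrics: Dict[str, Callable[[Transcript], bool]],
    workers: int = 8,
) -> Dict[str, float]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        transcripts = list(pool.map(agent, dataset))
    return {
        name: sum(metric(t) for t in transcripts) / len(transcripts)
        for name, metric in metrics.items()
    }


DATASET = [
    Scenario("Cheap seats for Knicks vs. Celtics", 100.0),
    Scenario("Anything under $150", 150.0),
    Scenario("Budget is tight", 50.0),
]

if __name__ == "__main__":
    for name, score in evaluate(DATASET, toy_agent, METRICS).items():
        print(f"{name}: {score:.0%}")
```

With these three sample scenarios, `within_budget` passes every time, while `bought_exactly_one` flags the $50 scenario where nothing fit the budget. Whether that counts as a failure or correct behavior is exactly the kind of judgment a real metric definition has to encode.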

Waymo's success was built on Brooke's work, and I believe she is poised to replicate that success with autonomous AI agents.

5. What Is Coval and My Thesis Behind the Company

Coval was founded in April of this year and recently participated in YC's Summer 2024 batch.

Currently, Coval's product can simulate thousands of conversations with agents, ending the game of "whack-a-mole" that development teams play when testing their agents against edge cases. Coval is modality- and industry-agnostic, partnering with startups building voice and chat agents in banking, mortgage payments, customer service, interview assistance, and healthcare.

I believe that while chat and voice agents will always have minor industry-specific nuances, the underlying simulation and evaluation methods will be largely the same.

Today, startups building AI agents spend precious engineering resources on building their own evaluation and simulation tooling, work that Coval's product can take off their hands.

Because Coval works closely with its customers as a partner rather than a point tool, I see it as a lucrative "picks-and-shovels" infrastructure play in the AI wave.

However, Coval faces a significant risk of the AI agent market becoming dominated by well-capitalized incumbents. The future of the AI agent landscape remains highly uncertain. Currently, the application layer is fragmented, with numerous startups offering AI agent solutions for a variety of problems. There's a possibility that the market could consolidate, similar to the model layer, where only well-funded startups can compete.

I'm excited to see how the AI agent space develops and I look forward to seeing what's in store for Brooke and the Coval team! Thanks for making it all the way to the end! If you have any thoughts, questions, or feedback, I'd love to hear them – your input is always valuable.